Cloudflare, please don't vibe code your own crypto

The hidden security risks of AI-generated code

Founder & CTO

Large Language Models (LLMs) have accelerated code generation beyond what most of us imagined possible.

Scaffolding complex software such as the workers-oauth-provider within Cloudflare's MCP framework is a “matter of days” now. While the velocity of initial development achieved through these models was unimaginable three years ago, an examination of the resultant security-critical code reveals significant concerns.

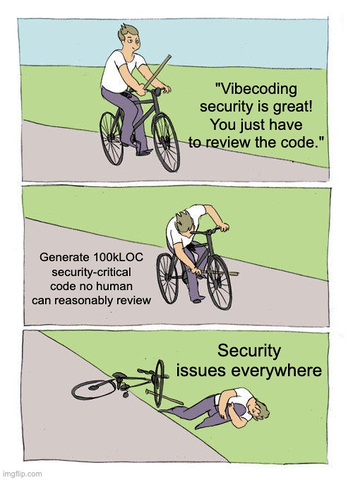

The established cryptographic principle, "don't roll your own crypto," now finds a modern and relevant equivalent: "don't vibe code your own crypto." The allure of rapid development can introduce substantial and often subtle vulnerabilities into foundational systems.

The speed at which code is generated shifts the cost onto the experts who must review it, such as open source maintainers or overworked senior technical staff. They have to build a strong mental model and a deep understanding of the generated code, only for it to change significantly after the next prompt. According to its README, this specific example was vetted by Cloudflare security experts (people we believe to be in the top 1% of security experts in the industry), yet examining it still surfaces fundamental design flaws in the implementation.

Where the code stumbles on security

A detailed look into the workers-oauth-provider code reveals several areas where fundamental security practices appear to have been misapplied or overlooked. These are not just academic concerns — they represent tangible risks.

Client secret handling needs an upgrade

The provider uses SHA-256 to hash client secrets. For machine-generated secrets, which are typically long random strings, SHA-256 alone might seem adequate, since pre-computation and simple dictionary attacks are less of a risk. Still, relying solely on a single fast, unsalted hash for storage offers less protection against dedicated offline cracking attempts to recover the original secret than a deliberately slow, salted derivation would. The comparison is also a plain equality check (providedSecretHash !== clientInfo.clientSecret), which is susceptible to timing side-channel attacks. The damage an attacker can do with a compromised OAuth2 Client always depends on that client's privileges. But in security, we prepare for the worst, not for “it’s fine”.

A more robust approach, providing a better balance between security and performance for storing these secrets, would be to use a salted key derivation function like PBKDF2-SHA256 and to verify candidates with a constant-time comparison. PBKDF2 adds a configurable work factor (iterations) that significantly increases the cost and time required for an attacker to brute-force the secret, even if they obtain the hash.
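A minimal sketch of that approach in TypeScript, using the `pbkdf2Sync`, `randomBytes`, and `timingSafeEqual` functions from Node.js's built-in `node:crypto` module. The function names, the `$`-separated storage format, and the iteration count are illustrative assumptions, not part of workers-oauth-provider. Verification goes through `timingSafeEqual`, which also removes the timing side channel of a plain `!==` comparison.

```typescript
import { pbkdf2Sync, randomBytes, timingSafeEqual } from "node:crypto";

// Illustrative parameters (assumptions): tune ITERATIONS to your runtime's limits and latency budget.
const ITERATIONS = 600_000;
const SALT_BYTES = 16;
const KEY_BYTES = 32;

// Hypothetical storage format: "pbkdf2-sha256$<iterations>$<salt base64>$<hash base64>"
function hashClientSecret(secret: string, iterations: number = ITERATIONS): string {
  const salt = randomBytes(SALT_BYTES); // fresh random salt per client secret
  const hash = pbkdf2Sync(secret, salt, iterations, KEY_BYTES, "sha256");
  return ["pbkdf2-sha256", iterations, salt.toString("base64"), hash.toString("base64")].join("$");
}

function verifyClientSecret(secret: string, stored: string): boolean {
  const [scheme, iterStr, saltB64, hashB64] = stored.split("$");
  const iterations = Number(iterStr);
  // Reject anything that does not parse as our format instead of throwing.
  if (scheme !== "pbkdf2-sha256" || !Number.isInteger(iterations) || iterations < 1 || !saltB64 || !hashB64) {
    return false;
  }
  const expected = Buffer.from(hashB64, "base64");
  const actual = pbkdf2Sync(secret, Buffer.from(saltB64, "base64"), iterations, KEY_BYTES, "sha256");
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return actual.length === expected.length && timingSafeEqual(actual, expected);
}
```

Storing the iteration count alongside the salt lets the work factor be raised later: old records still verify, and they can be re-hashed on the next successful authentication.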

The static wrapping key: a public secret offers little protection

The mechanism for protecting sensitive props data associated with tokens relies on key wrapping. An HMAC-SHA key (WRAPPING_KEY_HMAC_KEY) is used to derive a per-token wrapping key, which then protects the actual data encryption key for that token's props. The critical flaw here is that this WRAPPING_KEY_HMAC_KEY is hardcoded directly into the library's source code (oauth-provider.ts). Since the library code is public, this "secret" key is effectively leaked to the world.

The impact of this is somewhat mitigated because each individual token's props are encrypted with a unique data encryption key, derived in part from the unique token string itself. This means the compromise of the static HMAC key doesn't immediately expose all data for all tokens.

However, the problem remains significant: an attacker who obtains a raw access token string (from logs, a compromised client, or a network breach) can now easily decrypt the associated props. They simply take the leaked raw token string and, using the publicly known static WRAPPING_KEY_HMAC_KEY, perform the same derivation steps as the server to get the per-token wrapping key. With this, they can unwrap the actual data encryption key and decrypt the props.
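To make the attack concrete, here is a minimal TypeScript sketch of the derivation chain described above, using `node:crypto`. It is not the library's code: the helper names are hypothetical, AES-256-GCM stands in for whatever wrapping algorithm the library actually uses, and `PUBLIC_HMAC_KEY` is a placeholder rather than the real hardcoded value. What it shows is that every input to the derivation except the token is public, so whoever holds the token can repeat it.

```typescript
import { createCipheriv, createDecipheriv, createHmac, randomBytes } from "node:crypto";

// Per-token wrapping key = HMAC-SHA256(hmacKey, token); 32 bytes, usable as an AES-256 key.
function deriveWrappingKey(hmacKey: Buffer, token: string): Buffer {
  return createHmac("sha256", hmacKey).update(token, "utf8").digest();
}

// Wrap a data key with AES-256-GCM. Layout: iv (12 bytes) | auth tag (16 bytes) | ciphertext.
function wrapDataKey(wrappingKey: Buffer, dataKey: Buffer): Buffer {
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", wrappingKey, iv);
  const ciphertext = Buffer.concat([cipher.update(dataKey), cipher.final()]);
  return Buffer.concat([iv, cipher.getAuthTag(), ciphertext]);
}

// Unwrap; decipher.final() throws if the wrapping key is wrong.
function unwrapDataKey(wrappingKey: Buffer, wrapped: Buffer): Buffer {
  const decipher = createDecipheriv("aes-256-gcm", wrappingKey, wrapped.subarray(0, 12));
  decipher.setAuthTag(wrapped.subarray(12, 28));
  return Buffer.concat([decipher.update(wrapped.subarray(28)), decipher.final()]);
}

// Placeholder for a key hardcoded in public source (NOT the library's actual value).
const PUBLIC_HMAC_KEY = Buffer.from("hypothetical-constant-published-in-source", "utf8");

// Server side: protect the per-token data key.
const token = "leaked-access-token"; // e.g. found in logs or on a compromised client
const dataKey = randomBytes(32);
const stored = wrapDataKey(deriveWrappingKey(PUBLIC_HMAC_KEY, token), dataKey);

// Attacker side: only public inputs plus the leaked token are needed.
const recovered = unwrapDataKey(deriveWrappingKey(PUBLIC_HMAC_KEY, token), stored);
console.log(recovered.equals(dataKey)); // true
```

If `PUBLIC_HMAC_KEY` were instead a server-side secret the attacker does not know, the final unwrap would fail GCM authentication and throw.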

If the WRAPPING_KEY_HMAC_KEY were a true, non-static server-side secret (e.g., loaded from a secure environment variable and unknown to the public), an attacker would need to compromise both the raw access token string and this server-side secret HMAC key. By hardcoding it, the defense is weakened; the attacker now only needs one piece of the puzzle – the raw token string.

This problem is exacerbated by the fact that the published code is intended for use as a library. No library should ever define static secrets.
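One way a library can avoid shipping a static secret is to require the caller to inject it and to refuse to start without it. The sketch below is hypothetical: the class name, the `wrappingHmacKey` option, and the `OAUTH_WRAPPING_HMAC_KEY` variable mentioned afterwards are illustrative, not workers-oauth-provider's API.

```typescript
import { createHmac } from "node:crypto";

// Hypothetical options: the key is supplied by the deployment, never defined in library source.
interface ProviderOptions {
  // Base64-encoded random bytes, e.g. from a secret binding or environment variable.
  wrappingHmacKey: string;
}

class HypotheticalOAuthProvider {
  private readonly hmacKey: Buffer;

  constructor(options: ProviderOptions) {
    const key = Buffer.from(options.wrappingHmacKey ?? "", "base64");
    // Fail closed: no secret (or a too-short one) means the provider does not start.
    if (key.length < 32) {
      throw new Error("wrappingHmacKey must decode to at least 32 bytes");
    }
    this.hmacKey = key;
  }

  // Per-token wrapping key = HMAC-SHA256(server-side secret, token).
  deriveWrappingKey(token: string): Buffer {
    return createHmac("sha256", this.hmacKey).update(token, "utf8").digest();
  }
}
```

In a Worker, the value could be provisioned with `wrangler secret put OAUTH_WRAPPING_HMAC_KEY` and passed in from the environment at startup, so each deployment has its own key and it never appears in source control.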

Broader design and token lifecycle questions

- Token re-use policies: The OAuth 2.0 Security Best Current Practice (RFC 9700) emphasizes that authorization codes must be strictly single-use. While the provider aims for this, its approach to refresh token rotation includes a grace period where an old refresh token remains valid for a short time even after a new one is issued. This is intended for reliability but deviates from the strictest OAuth 2.1 guidance, which favors immediate invalidation to minimize risk if a refresh token is compromised.

- Data model challenges: Utilizing a basic key/value store with encrypted data blobs for grant and token information can create significant hurdles for future data migrations, schema evolution, or implementing more granular security controls. This can inadvertently lock the system into its initial design, making adaptation difficult.

- Past vulnerabilities (PKCE and redirect_uri): Although reportedly patched, previous issues like a bypass of the PKCE mechanism (critical for protecting against authorization code interception) and inadequate redirect_uri validation (which could lead to credential theft) demonstrate the inherent complexities and potential pitfalls in implementing OAuth correctly. These serve as a reminder of the types of critical errors that can arise.
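For contrast with a grace period, here is a minimal sketch of strict refresh token rotation with reuse detection, in the spirit of the rotation scheme described in RFC 9700: the old token stops working the moment a new one is issued, and presenting an already-used token revokes the whole grant. The class and field names are hypothetical, and the in-memory `Map` sidesteps a real concern: a production store must make the "used" check and update atomic.

```typescript
import { randomBytes } from "node:crypto";

// Hypothetical record: which grant a refresh token belongs to, and whether it was already rotated.
interface RefreshRecord {
  grantId: string;
  used: boolean;
}

class RefreshTokenStore {
  private readonly tokens = new Map<string, RefreshRecord>();
  private readonly revokedGrants = new Set<string>();

  issue(grantId: string): string {
    const token = randomBytes(32).toString("base64url");
    this.tokens.set(token, { grantId, used: false });
    return token;
  }

  // Exchange a refresh token for a new one; the presented token is invalid immediately afterwards.
  rotate(presented: string): string {
    const record = this.tokens.get(presented);
    if (!record || this.revokedGrants.has(record.grantId)) {
      throw new Error("invalid_grant");
    }
    if (record.used) {
      // Reuse of a rotated token suggests theft: revoke every token in this grant.
      this.revokedGrants.add(record.grantId);
      throw new Error("invalid_grant");
    }
    record.used = true;
    return this.issue(record.grantId);
  }
}
```

The trade-off is the one the bullet describes: a client that loses the response to a rotation request must re-authenticate, in exchange for a stolen refresh token being usable at most once.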
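The PKCE and redirect_uri checks are short, which is exactly why mistakes in them are easy to overlook. Below is a minimal sketch of both using `node:crypto`: an S256 PKCE verification following RFC 7636 (only S256 is accepted, and the verifier format is checked), and exact string matching of redirect URIs against pre-registered values, as RFC 9700 requires (RFC 9700 allows an exception for localhost port numbers in native apps, which is not handled here). The function names are hypothetical and do not reflect how workers-oauth-provider structures this logic.

```typescript
import { createHash, timingSafeEqual } from "node:crypto";

// RFC 7636 S256: BASE64URL(SHA256(ASCII(code_verifier))) must equal the stored code_challenge.
function verifyPkceS256(codeVerifier: string, codeChallenge: string): boolean {
  // RFC 7636: the verifier is 43 to 128 characters from [A-Z a-z 0-9 - . _ ~].
  if (!/^[A-Za-z0-9\-._~]{43,128}$/.test(codeVerifier)) return false;
  const computed = Buffer.from(createHash("sha256").update(codeVerifier, "ascii").digest("base64url"));
  const expected = Buffer.from(codeChallenge);
  return computed.length === expected.length && timingSafeEqual(computed, expected);
}

// Exact string comparison only: no prefix, substring, or pattern matching.
function isRegisteredRedirectUri(requested: string, registered: readonly string[]): boolean {
  return registered.includes(requested);
}
```

A token endpoint would also reject the exchange outright when a code was issued with a code_challenge but no code_verifier is presented, rather than skipping the check.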

The real cost of cutting corners with AI

This isn't about a single piece of code. It's about a methodology. LLMs can generate code at an astounding pace. But reviewing, validating, and securing that code, especially when it's for something as sensitive as a security protocol, is a meticulous, time-consuming process for human experts. The rapid generation by an LLM can create a "code dump" that then requires disproportionate effort to secure, often by a community that wasn't involved in its creation.

The adage "don't write your own crypto" is well-known. Perhaps it's time to popularize its modern companion: "don't let an AI write your crypto without deep, expert oversight."

The secure path — rely on proven, audited solutions

For foundational security infrastructure like OAuth and OpenID Connect, the most prudent approach is to leverage established, rigorously vetted, and actively maintained open-source solutions. Consider these alternatives:

- Ory Hydra: A certified OpenID Connect provider and OAuth 2.0 server, built with a security-first mindset in Go. It undergoes regular security audits and is trusted in hundreds of production environments and by OpenAI itself.

- node-oidc-provider (Node.js): A widely adopted, certified OpenID Provider (OP) library for Node.js, known for its feature completeness and adherence to OpenID Foundation standards.

Why these are a smarter choice:

- Deep expertise: Developed and maintained by specialists in identity and security protocols.

- Rigorous audits & certifications: Many have passed formal third-party security audits and achieved official certifications (e.g., from the OpenID Foundation), offering a higher degree of trust.

- Battle-tested: They are actively used by a multitude of organizations, meaning they've been tested against a wide array of real-world attack vectors and operational scenarios.

- Strong communities & support: Benefit from active communities, receive consistent security patches, and provide comprehensive documentation.

- Mature and evolving designs: Their architectures and data models have been refined over time, incorporating security best practices, and are designed for extensibility and long-term maintainability.

LLMs are undeniably transformative and can greatly assist developers. They can help draft code, offer solutions, and even serve as learning aids. However, for the core, security-defining elements of your infrastructure, they are not yet a replacement for expert human design, painstaking implementation, and continuous scrutiny by security professionals.

We should use AI to enhance our capabilities, not to sidestep the hard work of building genuinely secure systems. When security is paramount, proven, audited, and community-backed solutions are the responsible choice.

Dive into our detailed guide on How to secure your MCP Servers with OAuth2.1 to learn more.
